There are many locking techniques that can be applied to synchronize access to a shared resource. Choosing the right one can make the difference between an application that scales and one that performs poorly, doesn't scale, or even suffers from lock convoys.

Since lock-based programming is so error-prone, Microsoft introduced STM.NET, an experimental platform that provides language and runtime features allowing the programmer to declaratively define regions of code that run in isolation, eliminating the need for locks altogether. However, until STM.NET becomes operational and proves efficient, we are stuck with primitive locks, so we might as well learn how to use them.

This post reviews the Monitor class, which enables exclusive locking; the three versions of the reader-writer lock, which enable both exclusive and shared locking; context-bound synchronized objects, which allow only one thread to access an object at a given time; and the immutability approach, in which objects are designed as read-only so that no lock is required on read. We'll examine the pros and cons of every technique and try to spot the cases in which each should be applied.

| Technique | Concurrent Read | Concurrent Read-Write | Concurrent Write |
|---|---|---|---|
| Monitor | No | No | No |
| Synchronization Domain | No | No | No |
| ReaderWriterLock | Yes | No | No |
| Immutability | Yes | Yes | No |

On Sasha Goldshtein's blog you can read about lightweight and lock-free techniques, such as spin locks, the cyclic lock-free buffer (spinners) and thread-local caches, that can protect a shared resource while avoiding the performance hit associated with a kernel lock.

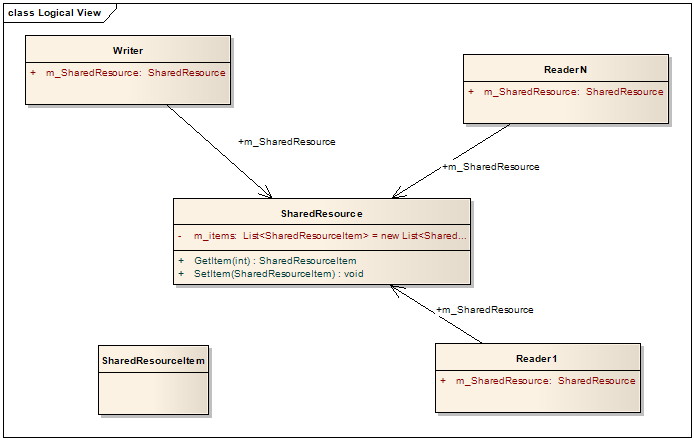

The Object Model

The locking techniques will be demonstrated on a simple object model that consists of a shared resource object, a single writer object and many reader objects. The SharedResource object encapsulates a list of items and allows adding items to and getting items from the list. The Writer object adds items to the shared resource and the Reader objects read from the list. The Writer and the Readers run on different threads, so they may access the shared resource concurrently.

Using a Monitor (lock)

The obvious way to protect the shared resource is to disallow concurrent access to it altogether by using the well-known Monitor class (the lock keyword in C#), which is the managed equivalent of Win32's critical section. With this simple one-at-a-time lock, only one thread can access the SharedResource at a time, so there is never simultaneous reading and writing of the list (nor simultaneous reading by two readers).
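A minimal sketch of such a Monitor-protected resource follows. The `SharedResourceItem` type, the member names and the one-writer/several-readers driver are assumptions made for illustration; the point is that every access, read or write, goes through the same exclusive `lock` on a private object.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical item type stored in the shared list.
public class SharedResourceItem
{
    public int Value { get; }
    public SharedResourceItem(int value) { Value = value; }
}

// Every access, read or write, takes the same exclusive Monitor lock.
public class SharedResourceMonitor
{
    private readonly object m_locker = new object(); // private: no outside code can lock on it
    private readonly List<SharedResourceItem> m_items = new List<SharedResourceItem>();

    public void SetItem(SharedResourceItem item)
    {
        lock (m_locker) { m_items.Add(item); }
    }

    public SharedResourceItem GetItem(int index)
    {
        lock (m_locker) { return m_items[index]; }
    }

    public int Count
    {
        get { lock (m_locker) { return m_items.Count; } }
    }
}

public static class MonitorDemo
{
    // Hypothetical driver: one writer and several readers on separate threads.
    // Returns the number of items in the resource once all threads have finished.
    public static int Run(int itemsToWrite, int readerCount)
    {
        var resource = new SharedResourceMonitor();
        var writer = new Thread(() =>
        {
            for (int i = 0; i < itemsToWrite; i++) resource.SetItem(new SharedResourceItem(i));
        });
        var readers = new List<Thread>();
        for (int r = 0; r < readerCount; r++)
        {
            readers.Add(new Thread(() =>
            {
                for (int i = 0; i < itemsToWrite; i++)
                {
                    int count = resource.Count;
                    if (count > 0) resource.GetItem(count - 1); // the list only grows, so this index stays valid
                }
            }));
        }
        writer.Start();
        readers.ForEach(t => t.Start());
        writer.Join();
        readers.ForEach(t => t.Join());
        return resource.Count;
    }
}
```

Readers and the writer serialize on `m_locker`, so while the design is trivially correct, readers also block each other.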

Lock Encapsulation

Always lock on a private object that is not exposed outside the class. Be careful not to break lock encapsulation by locking on public members, exposed fields, the object itself via ‘lock(this)’ or the type object via ‘lock(typeof(T))’. Violating this rule makes your code vulnerable to hard-to-detect deadlocks caused by other code that locks on the same publicly reachable objects.
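The hazard can be demonstrated without an actual deadlock. In the sketch below (all class and method names are hypothetical), outside code locks on a counter instance and, while holding that lock, waits for another thread to call `Increment`. With `lock(this)` the call is blocked by the outside lock; with a private lock object it is not. If the outside thread were in turn waiting for something the blocked thread holds, the blocking would become a deadlock.

```csharp
using System;
using System.Threading;

// Anti-pattern: the class locks on 'this', which any caller can lock on too.
public class ExposedLockCounter
{
    private int m_count;
    public void Increment() { lock (this) { m_count++; } }
    public int Count { get { lock (this) { return m_count; } } }
}

// Encapsulated: the class locks on a private object that no caller can reach.
public class PrivateLockCounter
{
    private readonly object m_locker = new object();
    private int m_count;
    public void Increment() { lock (m_locker) { m_count++; } }
    public int Count { get { lock (m_locker) { return m_count; } } }
}

public static class LockEncapsulationDemo
{
    // Simulates careless outside code that locks on 'target' and, while holding it,
    // waits for another thread to run 'increment'. Returns true if that call finished
    // within the timeout, false if it was blocked by the outside lock.
    public static bool CompletesWhileExternallyLocked(object target, Action increment, int timeoutMs)
    {
        var worker = new Thread(() => increment());
        bool finished;
        lock (target)
        {
            worker.Start();
            finished = worker.Join(timeoutMs);
        }
        worker.Join(); // once the outside lock is released, the call can complete
        return finished;
    }
}
```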

Moreover, locking on type objects can even cause deadlocks across AppDomains, which can be very hard to spot. AppDomains are assumed to be completely isolated, but that is not the case for ‘marshal-by-bleed’ objects, such as the type objects of assemblies that were loaded domain-neutral so they can be shared across domains (for instance, by using LoaderOptimizationAttribute).

Recursive Acquires

A Monitor lock can be acquired recursively by the same thread, so the following code does not deadlock.

```csharp
public class RecursiveLocked
{
    private readonly object m_locker = new object();

    public void Foo()
    {
        lock (m_locker)          // lock is now held once
        {
            lock (m_locker)      // re-acquired by the same thread: no deadlock, held twice
            {
                // each exit from a lock block releases one level of the recursion
            }
        }
    }
}
```

Performance

With Monitor, you only start paying a significant price when lock contention occurs, since only then is a kernel object actually allocated (or reused) to put the waiting thread into a wait state, which results in a context switch (which, according to Joe Duffy, costs 2,000–8,000 cycles).

To avoid the performance hit of entering a wait state, both Win32 critical sections and the CLR Monitor class spin for a short while, checking whether the lock has been released, before actually waiting. While the spin time of a Win32 critical section is tuned according to the success history of previous spins, the spin time of the CLR Monitor is fixed.
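The spin-then-wait idea can be sketched on top of `Monitor` itself. `SpinThenBlock.Enter` and its `spinIterations` parameter are hypothetical, and since `Monitor.Enter` already spins internally this is only an illustration, not something to use instead of `lock`. The fixed iteration count mirrors the fixed spinning described above.

```csharp
using System;
using System.Threading;

public static class SpinThenBlock
{
    // Hypothetical helper: try to take the Monitor a few times while spinning,
    // and fall back to a blocking Monitor.Enter only if the lock is still held.
    // Returns true if the lock was obtained during the spin phase.
    // The caller must release the lock with Monitor.Exit in either case.
    public static bool Enter(object locker, int spinIterations)
    {
        var spinner = new SpinWait();
        for (int i = 0; i < spinIterations; i++)
        {
            if (Monitor.TryEnter(locker)) return true; // acquired without entering a wait state
            spinner.SpinOnce();                        // busy-wait briefly (SpinWait may yield after a while)
        }
        Monitor.Enter(locker); // give up spinning and wait for the lock
        return false;
    }
}
```

Usage follows the usual `try`/`finally` shape: call `SpinThenBlock.Enter(locker, 20)`, do the work in `try`, and call `Monitor.Exit(locker)` in `finally`.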

Pros

The good thing about the Monitor is that it is very simple to use, understand and maintain. It is also more reliable, in the sense that it is not leaked (left held forever) when a thread abort occurs in the middle of a lock (thanks to a “hack” in the JIT), unlike the three versions of the reader-writer lock that will be reviewed shortly.
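Part of this simplicity is that the `lock` statement handles release for you. Since C# 4, the compiler expands `lock (x) { ... }` into roughly the pattern below, where the `ref bool lockTaken` overload of `Monitor.Enter` tells the `finally` block whether the lock was actually acquired. The `ExpandedLock` class is hypothetical, and `Monitor.IsEntered` assumes .NET Framework 4.5 or later.

```csharp
using System;
using System.Threading;

public class ExpandedLock
{
    private readonly object m_locker = new object();
    private int m_value;

    // Roughly what the compiler generates for: lock (m_locker) { body(); }
    public void Run(Action body)
    {
        bool lockTaken = false;
        try
        {
            Monitor.Enter(m_locker, ref lockTaken); // lockTaken becomes true only once the lock is held
            body();
        }
        finally
        {
            if (lockTaken) Monitor.Exit(m_locker); // release exactly what was acquired
        }
    }

    public void Increment() { Run(() => m_value++); }

    public int Value
    {
        get { int v = 0; Run(() => v = m_value); return v; }
    }

    public bool IsHeldByCurrentThread { get { return Monitor.IsEntered(m_locker); } }
}
```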

Cons

Since the Monitor lock is so easy to use, people tend to use it in cases where shared (reader/writer) locks are far more appropriate, which leads to lock convoys. Think about a case where the same action runs concurrently on many threads and accesses a shared resource 10% of the time: 1% of the time it writes to the resource and 9% of the time it reads from it. Since the resource can safely be read by multiple threads concurrently, acquiring an exclusive lock such as the Monitor for reads can needlessly block other threads for up to 9% of the time.
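The difference can be shown directly: while one reader holds a Monitor, a second reader cannot enter, whereas a ReaderWriterLockSlim read lock admits it. The `ReaderAdmission` helper is hypothetical, and the zero-timeout `TryEnter` calls stand in for a second reader that would otherwise have to wait.

```csharp
using System;
using System.Threading;

public static class ReaderAdmission
{
    // A background "reader" holds the Monitor; can a second reader get in at the same time?
    public static bool SecondReaderEntersMonitor()
    {
        var locker = new object();
        using (var held = new ManualResetEventSlim(false))
        using (var release = new ManualResetEventSlim(false))
        {
            var reader = new Thread(() =>
            {
                lock (locker) { held.Set(); release.Wait(); } // reading under an exclusive lock
            });
            reader.Start();
            held.Wait();
            bool entered = Monitor.TryEnter(locker, 0); // second reader, no waiting
            if (entered) Monitor.Exit(locker);
            release.Set();
            reader.Join();
            return entered;
        }
    }

    // Same scenario, but both readers take a shared (read) lock.
    public static bool SecondReaderEntersReadLock()
    {
        using (var rwl = new ReaderWriterLockSlim())
        using (var held = new ManualResetEventSlim(false))
        using (var release = new ManualResetEventSlim(false))
        {
            var reader = new Thread(() =>
            {
                rwl.EnterReadLock();
                try { held.Set(); release.Wait(); }
                finally { rwl.ExitReadLock(); }
            });
            reader.Start();
            held.Wait();
            bool entered = rwl.TryEnterReadLock(0); // shared lock: admitted alongside the first reader
            if (entered) rwl.ExitReadLock();
            release.Set();
            reader.Join();
            return entered;
        }
    }
}
```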

ContextBoundObject (Synchronization Context)

Instead of putting a lock in every method, we can let the .NET runtime keep the object thread-safe for us. By decorating the class with SynchronizationAttribute and deriving it from ContextBoundObject, we instruct the runtime to restrict access to a single thread at a time.

The runtime creates a synchronization domain for the context-bound object and uses its call interception mechanism (remoting proxies and message sinks) to lock the object before any method executes and to release the lock when the method exits. Sadly, this slows down every invocation on the object by an order of magnitude.

Synchronization Context vs. COM Apartments

COM apartments contain threads and objects. When an object is created within an apartment, it stays there all its life, forever house-bound along with the resident thread(s). This is similar to an object being contained within a .NET synchronization context, except that a synchronization context does not own or contain threads. Any thread can call upon an object in any synchronization context, subject to waiting for the exclusive lock. But objects contained within an apartment can only be called upon by a thread within the apartment.

Using a Reader-Writer Lock

ReaderWriterLock (RWL) works best where most accesses are reads, while writes are infrequent and short. With RWL, multiple threads can hold the reader lock (a.k.a. shared lock) simultaneously, but only one thread can hold the writer lock (a.k.a. exclusive lock), and the reader lock and the writer lock cannot be held at the same time. Consequently, holding reader locks or writer locks for long periods will starve other threads. For best performance, consider restructuring your application to minimize the duration of writes.
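Here is a minimal sketch of the shared resource protected by the classic `System.Threading.ReaderWriterLock`, assuming the same hypothetical `SharedResourceItem` type: readers take the shared lock, the writer takes the exclusive lock, and each release sits in a `finally` block.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical item type stored in the shared list.
public class SharedResourceItem
{
    public int Value { get; }
    public SharedResourceItem(int value) { Value = value; }
}

public class SharedResourceRwl
{
    private readonly ReaderWriterLock m_rwl = new ReaderWriterLock();
    private readonly List<SharedResourceItem> m_items = new List<SharedResourceItem>();

    public SharedResourceItem GetItem(int index)
    {
        m_rwl.AcquireReaderLock(Timeout.Infinite); // many readers may hold this at once
        try { return m_items[index]; }
        finally { m_rwl.ReleaseReaderLock(); }
    }

    public void SetItem(SharedResourceItem item)
    {
        m_rwl.AcquireWriterLock(Timeout.Infinite); // waits until no reader or writer holds the lock
        try { m_items.Add(item); }
        finally { m_rwl.ReleaseWriterLock(); }
    }

    public int Count
    {
        get
        {
            m_rwl.AcquireReaderLock(Timeout.Infinite);
            try { return m_items.Count; }
            finally { m_rwl.ReleaseReaderLock(); }
        }
    }
}
```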

Pros

The fact that RWL allows concurrent reads (shared locking) makes it appealing for most parallel applications, since most of them read more than they write. Replacing the Monitor lock, which allows only exclusive locking, with an RWL, which combines shared locking for readers with exclusive locking for writers, can make the difference between an application that scales and one that suffers from lock convoys.

Cons

The problem with the earlier versions of the RWL (.NET Framework 1.0 through 3.0) is that in the case of a ‘hot lock’ (where there is actual lock contention between threads, so the kernel object is actually allocated or reused) it performs much worse than the Monitor lock (about 6 times slower).

Alternatives

Newer versions of the .NET Framework (starting with 3.5) introduce the ReaderWriterLockSlim class (purely managed), which does the same job but performs better (though still about twice as slow as the Monitor lock).

Improved as it is, ReaderWriterLockSlim still updates a shared location in the lock's data structure (with an interlocked operation), even when acquiring a read lock. When many threads frequently try to acquire the read lock, there can be contention on the cache line holding this shared location. When the critical section is not big enough to “space out” the cost of the lock acquisitions, the resulting cache contention prevents scaling.
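A sketch of the same resource on ReaderWriterLockSlim follows. `SharedResourceSlim` and its `AddIfMissing` helper are hypothetical; the helper shows the upgradeable read mode, where a thread reads first and upgrades to the write lock only if it actually needs to write. Only one thread at a time can hold upgradeable mode, while ordinary readers can still enter alongside it.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical item type stored in the shared list.
public class SharedResourceItem
{
    public int Value { get; }
    public SharedResourceItem(int value) { Value = value; }
}

public class SharedResourceSlim : IDisposable
{
    private readonly ReaderWriterLockSlim m_lock = new ReaderWriterLockSlim(); // NoRecursion by default
    private readonly List<SharedResourceItem> m_items = new List<SharedResourceItem>();

    public SharedResourceItem GetItem(int index)
    {
        m_lock.EnterReadLock();
        try { return m_items[index]; }
        finally { m_lock.ExitReadLock(); }
    }

    public void SetItem(SharedResourceItem item)
    {
        m_lock.EnterWriteLock();
        try { m_items.Add(item); }
        finally { m_lock.ExitWriteLock(); }
    }

    // Hypothetical helper: search under an upgradeable read lock, upgrade only when adding.
    public bool AddIfMissing(int value)
    {
        m_lock.EnterUpgradeableReadLock();
        try
        {
            foreach (var existing in m_items)
                if (existing.Value == value) return false;

            m_lock.EnterWriteLock(); // upgrade: waits for the current readers to leave
            try { m_items.Add(new SharedResourceItem(value)); return true; }
            finally { m_lock.ExitWriteLock(); }
        }
        finally { m_lock.ExitUpgradeableReadLock(); }
    }

    public int Count
    {
        get
        {
            m_lock.EnterReadLock();
            try { return m_items.Count; }
            finally { m_lock.ExitReadLock(); }
        }
    }

    public void Dispose() { m_lock.Dispose(); }
}
```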

The Win32 slim reader/writer lock (SRWL), new in Vista and Server 2008, performs even better than the Monitor lock. It is not based on a standard kernel object; it is very thin and executes almost entirely in user mode, so no kernel transitions are required to access and manipulate it. That saves thousands of cycles compared to almost every other available lock, which lazily allocates or reuses a kernel object. The price we pay for the SRWL's thinness is that, unlike ReaderWriterLock and ReaderWriterLockSlim, it cannot be acquired recursively (which may not be so bad after all, because it encourages better locking design), it cannot be upgraded or downgraded, and it doesn't track its owning thread, so it can be acquired by one thread and released by another without raising anything like a SynchronizationLockException.
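SRWL is a native Win32 API, so rather than P/Invoking it, this sketch shows the managed ReaderWriterLockSlim behavior it is contrasted with: recursion is rejected with a LockRecursionException unless the lock is created with `LockRecursionPolicy.SupportsRecursion`, and because the lock tracks its owners, releasing it from a thread that doesn't hold it throws a SynchronizationLockException. The `RwlsBehavior` helper names are hypothetical.

```csharp
using System;
using System.Threading;

public static class RwlsBehavior
{
    // Tries to take the read lock twice on the same thread under the given policy.
    public static bool AllowsRecursiveRead(LockRecursionPolicy policy)
    {
        using (var rwl = new ReaderWriterLockSlim(policy))
        {
            rwl.EnterReadLock();
            try
            {
                rwl.EnterReadLock(); // throws LockRecursionException under NoRecursion
                rwl.ExitReadLock();
                return true;
            }
            catch (LockRecursionException)
            {
                return false;
            }
            finally
            {
                rwl.ExitReadLock(); // release the first acquisition
            }
        }
    }

    // Acquires the read lock on this thread and tries to release it from another thread.
    public static bool RejectsReleaseFromOtherThread()
    {
        using (var rwl = new ReaderWriterLockSlim())
        {
            rwl.EnterReadLock();
            bool rejected = false;
            var other = new Thread(() =>
            {
                try { rwl.ExitReadLock(); }
                catch (SynchronizationLockException) { rejected = true; } // not an owner of the lock
            });
            other.Start();
            other.Join();
            rwl.ExitReadLock(); // the owning thread releases normally
            return rejected;
        }
    }
}
```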

Using Immutable objects

An immutable object is an object whose state cannot be modified after it is created. Thus, multiple threads can act on data represented by immutable objects without concern that the data will be changed by other threads. To make this work, instead of changing the object itself, we create a new object that reflects the new state.
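As a small illustration (the `Account` type is hypothetical), all state is set in the constructor, and an operation that "changes" the object returns a new instance instead:

```csharp
using System;

// Hypothetical immutable type: state is assigned once, in the constructor.
public sealed class Account
{
    public string Owner { get; }
    public decimal Balance { get; }

    public Account(string owner, decimal balance)
    {
        Owner = owner;
        Balance = balance;
    }

    // "Modifying" produces a new instance that reflects the new state; this one is untouched.
    public Account Deposit(decimal amount) => new Account(Owner, Balance + amount);
}
```

Any thread that holds a reference to the original instance can keep reading it without a lock while another thread works with the new one.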

In this case, we will make the m_items list immutable. On read (GetItem) we can use it freely without a lock (we just read the reference into a local variable, which is an atomic operation), and on write we create a new list with the new item and replace m_items with the updated list. Naturally, the cloning and the assignment of m_items are done within a lock to prevent data loss (so there is no concurrent writing).

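```csharp
using System.Collections.Generic;

public class SharedResourceImmutable
{
    private readonly object m_writeLock = new object();

    // Never modified after it is assigned; 'volatile' makes each newly published list visible to readers.
    private volatile List<SharedResourceItem> m_items = new List<SharedResourceItem>();

    public SharedResourceItem GetItem(int index)
    {
        // No lock: copy the reference (an atomic read) and work on that snapshot.
        List<SharedResourceItem> items = m_items;
        return items[index];
    }

    public void SetItem(SharedResourceItem sharedResourceItem)
    {
        lock (m_writeLock) // writers are serialized so no update is lost
        {
            List<SharedResourceItem> buffer = new List<SharedResourceItem>(m_items);
            buffer.Add(sharedResourceItem);
            m_items = buffer; // publish the new version
        }
    }
}
```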

Pros

The great thing about immutability is that readers are never blocked. Think about a case where a single resource is read frequently while modifying it takes a significant amount of time: a locking mechanism that doesn't allow concurrent reading and writing will produce many contentions that can drastically harm performance. In this case, the price we pay for immutability (cloning on every write) is more than worthwhile.
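The sketch below, modeled on SharedResourceImmutable but with `int` items and hypothetical names (`CopyOnWriteResource`, `SnapshotDemo`), shows why readers need no lock: a reader works on whatever list it grabbed, and that list never changes under it, even while the writer keeps publishing new versions. Since the writer adds 0, 1, 2, ... in order, every consistent snapshot of `n` items must sum to n(n-1)/2.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

public class CopyOnWriteResource
{
    private readonly object m_writeLock = new object();
    private volatile List<int> m_items = new List<int>();

    // Readers never block. Callers must treat the returned list as read-only.
    public IReadOnlyList<int> Snapshot() => m_items;

    public void Add(int value)
    {
        lock (m_writeLock) // writers still serialize with each other
        {
            var buffer = new List<int>(m_items) { value };
            m_items = buffer; // publish the new version
        }
    }
}

public static class SnapshotDemo
{
    // One writer adds 0..writes-1 while a reader repeatedly checks the current snapshot.
    // Returns how many snapshots were internally inconsistent (expected: 0).
    public static int CountInconsistentSnapshots(int writes, int reads)
    {
        var resource = new CopyOnWriteResource();
        int inconsistent = 0;
        var writer = new Thread(() => { for (int i = 0; i < writes; i++) resource.Add(i); });
        var reader = new Thread(() =>
        {
            for (int r = 0; r < reads; r++)
            {
                IReadOnlyList<int> snapshot = resource.Snapshot(); // stable from here on
                long sum = 0;
                foreach (int v in snapshot) sum += v;
                long n = snapshot.Count;
                if (sum != n * (n - 1) / 2) inconsistent++;
            }
        });
        writer.Start();
        reader.Start();
        writer.Join();
        reader.Join();
        return inconsistent;
    }
}
```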

Cons

The problem with immutable objects is that they have to be cloned every time they change, and they are less comfortable to write, understand and maintain.
